Update: The Python code for this TwitterScraper can be forked/cloned from my Git repository.

———–

For most people, the most interesting part of the previous post will be the final results. But for those who would like to try something similar, or who are curious about the technical part, I will explain the methods and techniques I used (mostly web scraping with BeautifulSoup4) to collect a few million tweets.

Setting up Python and its relevant packages

I used Python as the programming language to collect all the relevant data because I have prior experience with it, but the same techniques should be applicable in other languages. If you do not have any experience with Python but would still like to use it, I can recommend the Coursera Python course, the Codecademy Python course or the book Learn Python the Hard Way. It might also be a good idea to get a basic understanding of how web APIs work.

The Python packages you will need are NumPy and SciPy for basic calculations, OAuth2 for authorization towards Twitter, tweepy because it provides a user-friendly wrapper of the Twitter API, and pymongo for interacting with a MongoDB database from Python (if that is the database you will use).

If you do not have Python and/or some of its packages, the easiest way to install them is as follows. On Linux, install pip (the Python package manager) first and then install any missing packages with pip install <package>. On Windows, I recommend installing Anaconda (which comes with a lot of built-in packages, including pip) first and then IPython. The missing packages can then be installed with the same command.

Getting your Twitter credentials

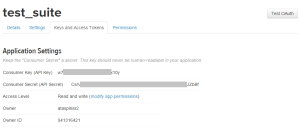

Twitter is using OAuth2 for authorization and authentication, so whether you are using tweepy to access the Twitter stream or some other method, make sure you have installed the OAuth2 package. After you have installed OAuth2 it is time to get your log-in credentials from https://apps.twitter.com. Log in, click on "create a new app", fill in the application details and copy your Consumer Key, Consumer Secret, Access Token and Access Token Secret to a text file.
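If you keep the credentials in a plain text file, a small helper can read them back into your script. The sketch below is only one possible way to do this; the `KEY=VALUE` file layout and the function name `load_credentials` are assumptions made for illustration, not something Twitter or tweepy prescribes.

```python
def load_credentials(path):
    """Read KEY=VALUE lines from a text file into a dict.

    Blank lines and lines starting with '#' are skipped.
    The file layout is an assumption made for this sketch.
    """
    credentials = {}
    with open(path) as handle:
        for line in handle:
            line = line.strip()
            if not line or line.startswith("#"):
                continue
            # Split on the first '=' only, so values may contain '='.
            key, _, value = line.partition("=")
            credentials[key.strip()] = value.strip()
    return credentials
```

Calling `load_credentials("twitter_credentials.txt")` (a hypothetical filename) would then return a dictionary from which you can pass the four values on to tweepy, instead of hard-coding them in your script.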

Accessing Twitter with its API

I recommend using tweepy [1], an open-source Twitter API wrapper that makes it easy to access Twitter. If you are using a programming language other than Python, or if you don't feel like using tweepy, you can look at the Twitter API documentation and find other means of accessing Twitter.

There are two ways in which you can mine for tweets: with the Streaming API or with the Search API. The main difference (for an overview, click here) between them is that with Search you can mine for tweets posted in the past, while Streaming goes forward in time and captures tweets as they are posted.

It is also important to take the rate limits of both APIs into account:

- With the Search API you can only send 180 requests per 15-minute window. With a maximum of 100 tweets per request, this means you can mine 4 x 180 x 100 = 72,000 tweets per hour. One way to increase the number of tweets is to authenticate as an application instead of as a user. This increases the rate limit from 180 to 450 requests per window, while reducing some of the possibilities you had as a user.

- With the Streaming API you can collect all tweets containing your keyword(s), up to 1% of the total tweets currently being posted on Twitter. So if your keyword is very general and more than 1% of all tweets contain it, you will not get all of the tweets containing this term. The obvious solution is to make your query more specific by combining multiple keywords. At the moment 500+ million tweets are posted per day, so 1% of all tweets still gives you about 5 million tweets a day.
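To make the arithmetic behind these limits explicit, here is a small calculation using only the figures mentioned above (15-minute windows, 100 tweets per request, 180 or 450 requests per window, 500 million tweets per day). The function name is made up for this sketch.

```python
WINDOW_MINUTES = 15          # length of one rate-limit window
TWEETS_PER_REQUEST = 100     # maximum number of tweets per Search API request


def tweets_per_hour(requests_per_window):
    """Upper bound on tweets per hour for a given per-window request limit."""
    windows_per_hour = 60 // WINDOW_MINUTES
    return windows_per_hour * requests_per_window * TWEETS_PER_REQUEST


user_limit = tweets_per_hour(180)            # authenticated as a user
app_limit = tweets_per_hour(450)             # authenticated as an application
stream_cap_per_day = 500 * 10**6 // 100      # 1% of 500 million tweets a day

print(user_limit)          # 72000
print(app_limit)           # 180000
print(stream_cap_per_day)  # 5000000
```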

Which one should you use?

Obviously, any prediction about the future should be based on tweets coming from the Streaming API, but if you need some data to fine-tune your model you can use the Search API to collect tweets from the past seven days; it does not go further back (Twitter documentation). Since there are 500+ million tweets posted every day, the past seven days should provide you with enough data to get started. However, if you need tweets older than seven days, web scraping might be a good alternative, since a search at twitter.com does return old tweets.

Using the tweepy package for streaming Twitter messages is pretty straightforward. There is even a code sample on the GitHub page of tweepy. So all you need to do is install tweepy or clone the GitHub repository and fill in the search terms in the relevant part of search.py.

With tweepy you can also search for Twitter messages (not older than seven days). The code sample below shows how it is done.

```python
import tweepy

# Fill in the credentials of your own Twitter app.
access_token = ""
access_token_secret = ""
consumer_key = ""
consumer_secret = ""

auth = tweepy.OAuthHandler(consumer_key, consumer_secret)
auth.set_access_token(access_token, access_token_secret)
api = tweepy.API(auth)

# Cursor takes care of paging through the search results.
# The query is passed as plain text; tweepy URL-encodes it for you.
for tweet in tweepy.Cursor(api.search, q="Tayyip Erdogan", lang="tr").items():
    print(tweet)
```

These two code samples again show the advantage of tweepy: it makes it really easy to access the Twitter API from Python, and as a result it is probably the most popular Python Twitter package. But because it uses the Twitter API, it is also subject to the limitations imposed by Twitter: the rate limit and the fact that you cannot search for Twitter messages older than seven days. Since I needed data from the previous elections, this posed a serious problem for me and I had to use web scraping to collect Twitter messages from May.

Using BeautifulSoup4 to scrape for tweets

There are some pros and cons to using web scraping (instead of the API) for the collection of Twitter data. One of the most important pros is that there is no rate limit on the website, so you can collect more tweets than the limit imposed on the Twitter API allows. Furthermore, you can also mine for tweets older than seven days :).

If we want to scrape twitter.com with BeautifulSoup, we need to send a request and extract the relevant information from the response. The Search API documentation gives a nice overview of the relevant parameters you can use in your query.

For example, if you want to request all tweets containing ‘akparti’ from 01 May 2015 until 05 June 2015, written in Turkish, you can do that with the following URL:

https://twitter.com/search?q=akparti%20since%3A2015-05-01%20until%3A2015-06-05&lang=tr
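Typing the percent-encoded query by hand is error-prone, so it can help to build the URL from its parts. The following is a minimal sketch using Python 3's standard library; the function name `build_search_url` and its parameters are made up for this example, and it only reproduces the URL format shown above.

```python
from urllib.parse import quote, urlencode


def build_search_url(keyword, since, until, lang):
    """Build a twitter.com search URL for a keyword, date range and language."""
    query = "{} since:{} until:{}".format(keyword, since, until)
    # quote_via=quote encodes spaces as %20 (instead of '+') and ':' as %3A.
    params = urlencode({"q": query, "lang": lang}, quote_via=quote)
    return "https://twitter.com/search?" + params


url = build_search_url("akparti", "2015-05-01", "2015-06-05", "tr")
print(url)
# https://twitter.com/search?q=akparti%20since%3A2015-05-01%20until%3A2015-06-05&lang=tr
```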

The tweets on this page can easily be scraped with the Python module BeautifulSoup.

```python
from urllib.request import urlopen  # in Python 2: from urllib2 import urlopen
from bs4 import BeautifulSoup

url = "https://twitter.com/search?q=akparti%20since%3A2015-05-01%20until%3A2015-06-05&lang=tr"
response = urlopen(url)
html = response.read()
# Name the parser explicitly so the result does not depend on what is installed.
soup = BeautifulSoup(html, "html.parser")
```

‘soup’ now contains the entire contents of the HTML page. Now, let's look at how we can extract the more specific elements containing only the tweet-text, tweet-timestamp or user. With the developer tools of Chrome (right-click on the tweet and then ‘Inspect element’) you can see which elements contain the desired contents and scrape them by their class name.

We can see that an <li> element with class ‘js-stream-item’ contains the entire contents of the tweet, a <p> with class ‘tweet-text’ contains the text, and the user is contained in a <span> with class ‘username’. This gives us enough information to extract these with BeautifulSoup:

```python
# `dt` refers to the datetime module (import datetime as dt), see the notes below.
tweets = soup.find_all('li', 'js-stream-item')
for tweet in tweets:
    # Only process stream items that actually contain a tweet text.
    if tweet.find('p', 'tweet-text'):
        tweet_user = tweet.find('span', 'username').text
        tweet_text = tweet.find('p', 'tweet-text').text.encode('utf8')
        tweet_id = tweet['data-item-id']
        timestamp = tweet.find('a', 'tweet-timestamp')['title']
        tweet_timestamp = dt.datetime.strptime(timestamp, '%H:%M - %d %b %Y')
    else:
        continue
```

Notes:

- The ‘text’ after tweet.find('span','username') is necessary to extract only the visible text, excluding all HTML elements.

- Since the tweets are written in Turkish, they probably contain non-ASCII characters which are not supported by default, so it is necessary to encode them as UTF-8.

- The date in the Twitter message is written in a human-readable format. To convert it to a datetime object which can be used further in Python, we need to use datetime's strptime method. To do this we additionally need to import the datetime and locale packages:

```python
import datetime as dt
import locale

locale.setlocale(locale.LC_ALL, 'turkish')
```
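To see what the format string `'%H:%M - %d %b %Y'` does, here is a small standalone example. The timestamp value is made up for illustration and uses an English month abbreviation, so it parses under Python's default locale without the `setlocale` call; for Turkish month names you would need the Turkish locale as described above.

```python
import datetime as dt

# A made-up timestamp in the same layout as the 'title' attribute of a tweet.
timestamp = "14:05 - 5 Jun 2015"

# %H:%M = hour and minute, %d = day of month, %b = abbreviated month name, %Y = year.
parsed = dt.datetime.strptime(timestamp, "%H:%M - %d %b %Y")

print(parsed.isoformat())  # 2015-06-05T14:05:00
```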

Scraping pages with infinite scroll

In principle this should be enough to scrape all of the Twitter messages containing the keyword ‘akparti’ within the specified dates. However, the Twitter website uses infinite scroll, which means it initially shows only ~20 tweets and keeps loading more tweets as you scroll down. So a single request will only get you the initial 20 tweets.

One of the most commonly used solutions for scraping pages with infinite scroll is Selenium. Selenium can open a web browser and scroll down to the bottom of the page (see stackoverflow), after which you can scrape the page. I do not recommend this. The biggest disadvantage of Selenium is that it actually opens up a browser and loads all of the tweets. Nowadays tweets can also contain videos and images, and loading these in your web browser will be slower than simply loading the source code of the page. If you are planning on scraping thousands or millions of tweets, it will be a very time-consuming and memory-intensive process.

There must be another way!

Let's open up the Chrome developer tools again (Ctrl + Shift + I) to find a solution for this problem. Under the Network tab, you can see the GET and POST requests that are sent the instant you reach the bottom and the page is filled with more tweets.

In our case this is a GET request which looks like

https://twitter.com/i/search/timeline?vertical=default&q=Erdogan%20since%3A2015-05-01%20until%3A2015-06-06&include_available_features=1&include_entities=1&lang=tr&last_note_ts=2088&max_position=TWEET-606971359399411712-606973762026803200&reset_error_state=false

The interesting parameter in this request is the parameter

max_position=TWEET-606971359399411712-606973762026803200.

Here the first number is the tweet-id of the last (oldest) tweet currently on the page, and the second number is the tweet-id of the first (newest) tweet. Scrolling down to the bottom again, we can see that this parameter has become

max_position=TWEET-606967763807182849-606973762026803200.

So every time new tweets are loaded on the page, the GET request above is sent with the ids of the first and last tweet on the page.
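A quick way to check how the parameter behaves is to split both values from the two requests above and compare the parts. This is just an illustrative sketch; `parse_max_position` is a made-up helper name.

```python
def parse_max_position(value):
    """Split 'TWEET-<id>-<id>' into a tuple of two integer tweet-ids."""
    prefix, first, second = value.split("-")
    if prefix != "TWEET":
        raise ValueError("unexpected max_position value: " + value)
    return int(first), int(second)


before = parse_max_position("TWEET-606971359399411712-606973762026803200")
after = parse_max_position("TWEET-606967763807182849-606973762026803200")

# After scrolling, the first id has moved to a smaller (older) tweet-id ...
print(after[0] < before[0])   # True
# ... while the second id has stayed the same.
print(after[1] == before[1])  # True
```

So only one of the two ids needs to be updated between consecutive requests.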

At this point I hope it has become clear what needs to be done to scrape all tweets from the twitter page:

1. Read the response of the ‘regular’ Twitter URL with BeautifulSoup. Extract the information you need and save it to a file/database. Separately save the tweet-id of the first and last tweet on the page.

2. Construct the above GET Request where you have filled in the tweet-id of the first and last tweet in their corresponding places. Read the response of this Request with BeautifulSoup and update the tweet-id of the last tweet.

3. Repeat step 2 until you get a response with no more new tweets.
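The three steps can be sketched as a simple loop. This is a minimal sketch under stated assumptions, not the code from my repository: `fetch_ids` is a hypothetical function you would have to write yourself (it sends the GET request, parses the response with BeautifulSoup and returns the tweet-ids it found, in page order), and the URL parameters are simply copied from the request shown above. It assumes, as in the two example requests, that the first id in `max_position` is the one that moves and the second one stays fixed.

```python
from urllib.parse import quote, urlencode

TIMELINE_URL = "https://twitter.com/i/search/timeline"


def build_timeline_url(query, lang, moving_id, fixed_id):
    """Build the GET request URL that loads the next batch of tweets."""
    params = {
        "vertical": "default",
        "q": query,
        "include_available_features": 1,
        "include_entities": 1,
        "lang": lang,
        "max_position": "TWEET-{}-{}".format(moving_id, fixed_id),
        "reset_error_state": "false",
    }
    return TIMELINE_URL + "?" + urlencode(params, quote_via=quote)


def scrape_all(first_page_ids, fetch_ids, query, lang, max_requests=1000):
    """Collect tweet-ids until a response contains no new tweets.

    first_page_ids: ids scraped from the 'regular' search page (step 1).
    fetch_ids:      hypothetical callable, url -> list of tweet-ids (step 2).
    """
    collected = list(first_page_ids)
    if not collected:
        return collected
    fixed_id = collected[0]            # first tweet on the page, never changes
    seen = set(collected)
    for _ in range(max_requests):      # safety cap against endless loops
        url = build_timeline_url(query, lang, collected[-1], fixed_id)
        new_ids = [i for i in fetch_ids(url) if i not in seen]
        if not new_ids:                # step 3: stop when nothing new arrives
            break
        collected.extend(new_ids)
        seen.update(new_ids)
    return collected
```

In a real script you would save the tweet text, user and timestamp inside the loop as well; the sketch tracks only the ids to keep the paging logic visible.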

——–

[1] Here are some good documents to get started with tweepy:

http://docs.tweepy.org/en/latest/getting_started.html

http://adilmoujahid.com/posts/2014/07/twitter-analytics/

http://pythoncentral.io/introduction-to-tweepy-twitter-for-python/

http://pythonprogramming.net/twitter-api-streaming-tweets-python-tutorial/

http://www.dototot.com/how-to-write-a-twitter-bot-with-python-and-tweepy/

36 thoughts on “Collecting Data from Twitter”

Hi Ahmet,

I have faced similar challenges when looking for tweets. When doing my master thesis I needed tweets back in the past for specific dates. On IBM Bluemix they provide a service which is called “Insights for Twitter” and there you can get tweets back to April 2014.

Yes, it is an interesting tool, but a bit too expensive if you ask me.

I plan to place all of the code on my GitHub account once I have finished this series of blog posts on text analytics and sentiment analysis. I hope it will help people to get data from Twitter and do sentiment analysis and other text classification on the collected tweets.

I understand you have already finished your master thesis, but do you think it would be useful for other students?

As far as I have understood it is good for use cases where one does only require tweet text and information like username or date.

Once you need metadata like geospatial or friends/followers etc. then one should look for alternatives, right?

There will also be functionality to retrieve information from a user's profile page. This will not only be useful to retrieve the location information for scraped tweets (which do not have geolocation information), but also to retrieve location information for tweets which were collected with Twitter's Search or Streaming API (because not all of them have the geolocation information filled in).

Of course it will also contain the number of followers etc.

Nice, i particularly like the “max_position” trick !

Do you think there is a way to scrape followers and friends like this (because you have to be logged in to see this information)? Is it possible with a registered application?

Hello Ahmet,

This is the smartest idea I have read after a long search on Google for a solution, and I finally found yours! I made some changes, but can you explain the infinite scroll method further? I found max_position and everything, but I didn't understand how to continue.

Thank you!

Thanks for your reaction Uros,

Twitter only loads 20 Tweets by default and whenever you reach the bottom of the page, it sends another GET request to its server which loads 20 new Tweets to the current page. This keeps on going forever or until you run out of Tweets for the currently chosen keywords.

So you can collect all of the tweets in a two step process:

1. First send out a regular GET request and from the loaded page collect the 20 tweets. At the same time, also collect the tweet-id of the first Tweet on the page and of the last tweet on the page.

2. With these two tweet-ids you can form another GET request which loads a new set of 20 tweets. The best way to do this is in a while loop, which keeps repeating until you run out of tweets or have reached the number of tweets you want to collect. This while loop keeps updating the URL with the tweet-id of the last tweet and sends out a new GET request each time.

I will upload the code to my GitHub account when I have some more time. For now, I will send it to you by email.

Good Luck!

Ahmet

hello

Clear explanation. As I have subscribed to this post, could you post a comment when you upload the code to GitHub? I'm also very interested.

thx a lot & nice day

What’s up, just wanted to tell you, I liked this

article. It was helpful. Keep on posting!

I like looking through an article that will make people think.

Also, thank you for allowing for me to comment!

Hello Ahmet, when I try to fetch tweets with the first method, no data is fetched. Actually, when I use tweepy.Cursor(api.user_timeline) no data is fetched either. However, if I use tweepy.Cursor(api.home_timeline) I can fetch tweets. Do you have any advice for me to solve this issue? Your answer will be appreciated. Thanks in advance.

Hi Tolga,

Unfortunately, I have not used tweepy for a long time, so I cannot help you with that.

But I have put my script for scraping tweets on GitHub. See https://github.com/taspinar/TwitterScraper

I will still modify the code to make it more object-oriented and add some install/usage instructions, but for now you can use it to scrape for tweets.

Hello ataspinar,

I used your script to collect more tweets and I rewrote a few lines of code in order to skip the error when a username or fullname is not found. I only get the text and the code runs perfectly, but after some time I get a pop-up message saying “Python has stopped working” … Do you have any idea why this message appears?

Hi Camilo,

This sounds like it is related to the environment you are running Python in or the Python version you are using, because I have never experienced this error when running it via Anaconda (as described in this post). Could you give more background information?

In any case, I made a small update in the script, maybe that works better for you.

Hi Ahmet,

I couldn't use your script. I don't understand where we write our Twitter access key and where the results are stored. Could you please describe these steps one by one?

Thanks.

Hi, this was great and v helpful for what I want to do. Have got all the way up to the last step like Uros above.

But when I get to the max_position part, do I just copy the address into the url in my python script? I have tried this and then when I print the results to csv it is blank? Could you please help?

Thanks!

Hi Zoe,

The code on this page is already quite outdated. Please have a look at the twitterscraper tool on my GitHub page:

https://github.com/taspinar/twitterscraper

Good luck!

Ahmet

Hi Ahmet,

Thanks for sharing your work on github. my question might sound silly, but I was able to use TwitterScraper successfully (with the command twitterscraper “” –output=tweets.json” but I am unable to retrieve my json file. Unless I am missing something, my eyes cannot playing a trick on me.. Help! Thanks!

Hi K,

Could you create an issue on the GitHub page? That makes it easier for me to keep track of everything.

Thanks,

Ahmet

Hi Ahmet

I searched the entire web to find ways to extract data from twitter ,and your article is the best i have read and the most efficient one too. Thanks a lot for sharing I had a great experience learning from it and implementing it .Thanks again 🙂

Hello Ahmet.

would you please show me how i can modify the codes you posted on git repository in order to collect only tweets in English. I am working on my studies research. please help. thank you.

Hi,

In order to collect tweets written in the English language, you can add “lang%3Aen” to the query.

The locations or geonames are not been extracted as for the same code that is specified. Though it is best solution I have came across for fetching Historical twitter data. Thanks for that. But, Main thing what i will be needing is the locations of the tweets. I am not able to filter out locations as I am writing [print tweet.text, “:”, tweet.user, “:”, print.coordinates]. The coordinates can’t be fetched and displays an error. While it works well for text and username attributes.

Hi Aakash,

The Twitter website does not contain information about the geolocation of the tweets and therefore this cannot be extracted from the tweets. There are two things you can do:

1. If you're looking for tweets from a specific location (city / country) you can include this information in the query. Then you can be sure that all of the tweets you have extracted are from that region.

2. You can retrieve the location of the users (not the tweets) from their profile page. Not all users include this information in their profile but you will be able to retrieve it for about 25% of the users.

I’m not quite sure if this is a relevant question, but are there any legal concerns with twitter scraping particularly in the USA?

Hi Doghr207,

I never did extensive research into any legal concerns, but I cannot imagine there are any, since we are scraping information which is publicly available. My only advice would be to not do it at a disruptive rate, and to do it for research purposes instead of commercial ones.

The explanation given here by Pablo Hoffman seems to answer your question pretty well.

Hi,

Just landed on this page. I’m not a coder, and I’d like to ask a question:

On the github page of the project [twitterscraper] there is a code example at the end of the readme to use TwitterScraper from within python:

This is the script:

```python
from twitterscraper import query_tweets

# All tweets matching either Trump or Clinton will be returned. You will get at
# least 10 results within the minimal possible time/number of requests
for tweet in query_tweets("Trump OR Clinton", 10)[:10]:
    print(tweet.user.encode('utf-8'))
```

I’d like to ask what the script would be to scrape the date range example [e.g. https://twitter.com/search?q=trump%20since%3A2017-01-15%20until%3A2017-01-31&lang=en ]

If someone could answer, I'd really appreciate it. I'm in the middle of my thesis, and this could potentially save me a lot of time and let me measure a lot more data points.

Thanks again!

Hi Leo,

Running twitterscraper with “twitterscraper q=trump%20since%3A2017-01-15%20until%3A2017-01-31&lang=en -o tweets.json” from the command line should output the desired tweets to the indicated file.

Good luck with your thesis!

well i am getting RecursionError { Reason: ‘RecursionError(‘maximum recursion depth exceeded in comparison’,). How to solve this recursion error in this application?

Could you open up an issue on the GitHub page with a full description of the error?

Great post. Thanks a lot.

I need to collect some more fields, e.g. number of retweets for a tweet, total number of tweets of that user etc. How can I modify the code to do these?

How can i save the tweets data in SQL Server rather than in json file? Also how can i get the data about Likes,Retweets and comment ?

The latest version of twitterscraper on GitHub should also be able to scrape information about the retweets, likes, favs etc

Is it possible to access all activity such as tweets ,comments,likes etc for a single user. Let’s say for public profiles , and public activity only ?

Twitter Scrapper does not work presumably due to a change in Twitter. Appreciate if you provide a solution. Thanks much Ahmet for all your great work.