In the previous post we learned how to do basic Sentiment Analysis with the bag-of-words technique. Here is a short summary:

- To keep track of the number of occurrences of each word, we tokenize the text and add each word to a single list. A Counter object then gives us the number of occurrences of each word.

- We can make a DataFrame containing the class probabilities of each word by adding each word to the DataFrame as we encounter it and afterwards dividing its counts by the total number of occurrences of that word.

- By sorting this DataFrame on the column of the Positive or the Negative class and taking the top 100 / 200 words, we can construct a list of positive or negative words.

- The words in this constructed Sentiment Lexicon can be used to give a value to the subjectivity of the reviews in the test set.

Using the steps described above, we were able to determine the subjectivity of reviews in the test set with an accuracy (F-score) of ~60%.
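As a quick refresher, the first two steps of the summary can be sketched with the standard library alone. This is a minimal illustration rather than the code from the previous post: the toy reviews, the two class labels, the whitespace tokenizer and the helper name class_probability are all assumptions made for the example.

```python
from collections import Counter, defaultdict

# Toy training set (hypothetical data, for illustration only).
training_set = [
    {"score": "positive", "review_text": "a good book"},
    {"score": "positive", "review_text": "good story"},
    {"score": "negative", "review_text": "a bad book"},
]

# One Counter per class keeps track of how often each word occurs in that class.
counts = defaultdict(Counter)
for review in training_set:
    tokens = review["review_text"].lower().split()  # naive whitespace tokenizer
    counts[review["score"]].update(tokens)


def class_probability(word, label):
    """Share of all occurrences of `word` that fall in class `label`."""
    total = sum(counter[word] for counter in counts.values())
    return counts[label][word] / total if total else 0.0


print(class_probability("good", "positive"))  # 1.0
print(class_probability("book", "negative"))  # 0.5
```

Words that only ever occur in one class get a probability of 1.0 for that class, which is why they end up at the top of the sorted lexicon.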

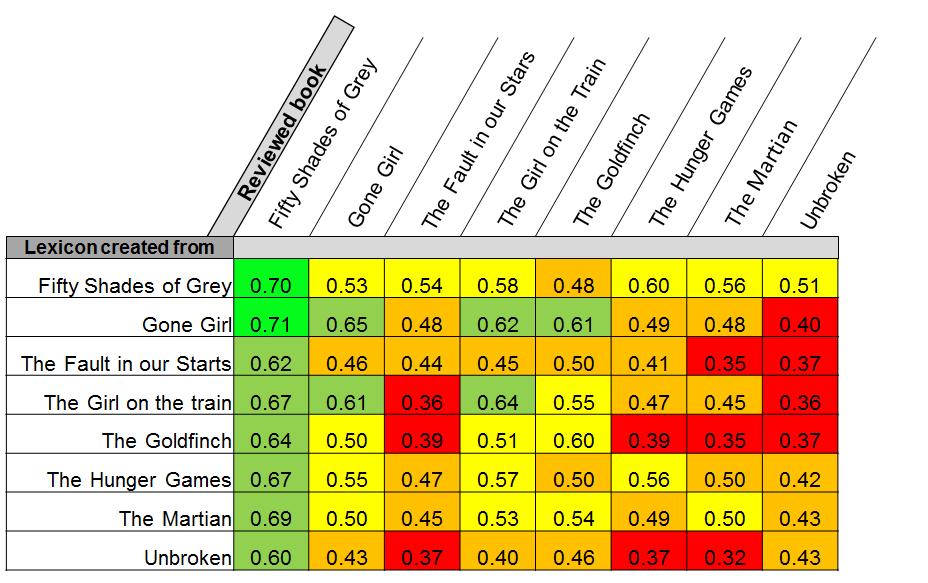

In this post we will look into the effectiveness of cross-book sentiment lexicons: how well does a sentiment lexicon made from book A perform at sentiment analysis of book B?

We will also see how we can improve the bag-of-words technique by including n-gram features in the bag-of-words.

Cross-book sentiment lexicons

In the previous post, we saw that the sentiment of reviews in the test set of ‘Gone Girl’ could be predicted with an accuracy of about 60%. How well does the sentiment lexicon derived from the training set of book A perform at deducing the sentiment of reviews in the test set of book B?

In the table above, we can see that the most effective Sentiment Lexicons are created from books with a large number of both Positive and Negative reviews. In the previous post we saw that Fifty Shades of Grey has a large number of negative reviews. This makes it a good book to construct an effective Sentiment Lexicon from. Other books have a lot of positive reviews but only a few negative ones. A Sentiment Lexicon constructed from these books has a high accuracy in determining the sentiment of positive reviews, but a low accuracy for negative reviews, which brings the average down.

Improving the bag-of-words with n-gram features

In the previous post we constructed a bag-of-words model with unigram features, meaning that we split the entire text into single words and count the occurrences of each word. Such a model does not take the position of each word in the sentence, its context or the grammar into account. That is why the bag-of-words model has a low accuracy in detecting the sentiment of a text document. For example, with the bag-of-words model the following two sentences will be given the same score:

1. “This is not a good book” –> 0 + 0 + 0 + 0 + 1 + 0 –> positive
2. “This is a very good book” –> 0 + 0 + 0 + 0 + 1 + 0 –> positive
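The effect is easy to reproduce. The sketch below scores a sentence by summing per-word values from a tiny lexicon; the lexicon contents and the helper name unigram_score are made up for the illustration.

```python
# Hypothetical one-word lexicon: only "good" carries a (positive) score.
lexicon = {"good": 1}


def unigram_score(sentence):
    """Sum the lexicon value of every word; unknown words count as 0."""
    return sum(lexicon.get(word, 0) for word in sentence.lower().split())


print(unigram_score("This is not a good book"))   # 1
print(unigram_score("This is a very good book"))  # 1
```

Because “not” and “very” are scored on their own, neither of them can change the contribution of “good”.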

If we include features consisting of two or three words, this problem can be avoided; “not good” and “very good” will be two different features with different subjectivity scores. The biggest reason why bigram or trigram features are not used more often is that the number of possible word combinations grows exponentially with the length n of the n-gram. Theoretically, a vocabulary of 2,000 distinct words allows 2,000 possible unigram features, 4,000,000 (2,000²) possible bigram features and 8,000,000,000 (2,000³) possible trigram features.

However, from a pragmatic point of view, most of the word combinations that can be formed are either grammatically impossible or occur too rarely to matter, and hence do not need to be taken into account.
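This gap between the theoretical and the observed number of features can be checked directly. The following sketch uses a made-up three-sentence corpus and a whitespace tokenizer, both assumptions for the example, and compares the number of possible bigrams with the number that actually occur.

```python
# Hypothetical toy corpus; real review texts would be used in practice.
corpus = [
    "this is not a good book",
    "this is a very good book",
    "i do not recommend this book",
]

tokenized = [sentence.split() for sentence in corpus]
vocabulary = {word for tokens in tokenized for word in tokens}

# Every ordered pair of vocabulary words is a theoretically possible bigram.
possible_bigrams = len(vocabulary) ** 2

# Bigrams that actually occur: pairs of adjacent words within a sentence.
observed_bigrams = {
    pair for tokens in tokenized for pair in zip(tokens, tokens[1:])
}

print(len(vocabulary), possible_bigrams, len(observed_bigrams))  # 10 100 13
```

Even in this tiny corpus only 13 of the 100 possible bigrams are ever seen, and the ratio shrinks further as the vocabulary grows.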

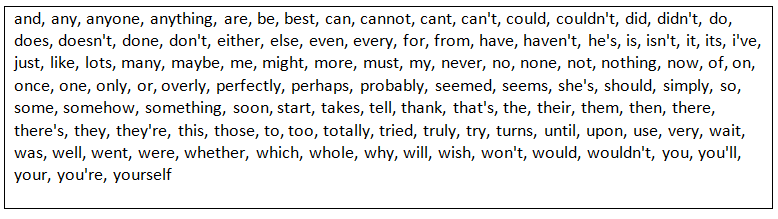

Actually, we only need to define a small set of words (prepositions, conjunctions, interjections etc.) which we know change the meaning of the words following them and/or the rest of the sentence. If we encounter such an ‘n-gram word’, we do not split the sentence directly after it but after the next word. In this way we construct n-gram features consisting of the specified words and the words directly following them. Two such words that appear in the examples in this post are “not” and “very”.

In the previous post, we saw that the code to construct a DataFrame containing the class probabilities of words in the training set is:

    import pandas as pd

    # scores, training_set and split_text come from the previous post;
    # scores holds the five review scores used as column labels.
    BOW_df = pd.DataFrame(0, columns=scores, index=[])
    words_set = set()
    for review in training_set:
        score = review['score']
        text = review['review_text']
        splitted_text = split_text(text)
        for word in splitted_text:
            if word not in words_set:
                # First time we see this word: add an all-zero row for it.
                words_set.add(word)
                BOW_df.loc[word] = [0, 0, 0, 0, 0]
            BOW_df.loc[word, score] += 1

If we also want to include n-grams in this class probability DataFrame, we need to add a function which generates n-grams from the split text and the list of specified n-gram words:

    (…)
    splitted_text = split_text(text)
    text_with_ngrams = generate_ngrams(splitted_text, ngram_words)
    for word in text_with_ngrams:
        (…)

There are a few conditions this “generate_ngrams” function needs to fulfill:

- When it iterates through the split text and encounters an n-gram word, it needs to concatenate this word with the next word. So [“I”, “do”, “not”, “recommend”, “this”, “book”] needs to become [“I”, “do”, “not recommend”, “this”, “book”]. At the same time it needs to skip the next iteration so the next word does not appear twice.

- It needs to be recursive: we might encounter multiple n-gram words in a row. Then all of these words need to be concatenated into a single n-gram. So [“This”, “is”, “a”, “very”, “very”, “good”, “book”] needs to be concatenated into [“This”, “is”, “a”, “very very good”, “book”]. If n words are concatenated into a single n-gram, the iterations for the n − 1 words that were appended need to be skipped.

- In addition to concatenating a word with the words following it, it might also be interesting to concatenate it with the word preceding it. For example, forming n-grams from the word “book” and its preceding word leads to features like “worst book”, “best book”, “fascinating book” etc.

Now that we know this, let’s have a look at the code:

    def generate_ngrams(text, ngram_words):
        new_text = []
        index = 0
        while index < len(text):
            new_word, new_index = concatenate_words(index, text, ngram_words)
            new_text.append(new_word)
            # Continue after the last word that was merged into new_word.
            index = new_index + 1
        return new_text

    def concatenate_words(index, text, ngram_words):
        word = text[index]
        if index == len(text) - 1:
            return word, index
        if word in ngram_words:
            # Merge the n-gram word with whatever follows it (recursively).
            word_new, new_index = concatenate_words(index + 1, text, ngram_words)
            word = word + ' ' + word_new
            index = new_index
        return word, index

Here concatenate_words is a recursive function which either returns the word at the index position in the array, or that word concatenated with the next word. It also returns the index of the last word it used, so we know how many iterations need to be skipped.

This function will also work if we want to append words to the words preceding them. We then simply pass the reversed text to it, text = list(reversed(text)), and concatenate in reversed order: word = word_new + ' ' + word.

We can put this information together in a single function, which can either concatenate with the next word or with the previous word, depending on the value of the parameter ‘forward’:

    def generate_ngrams(text, ngram_words, forward=True):
        new_text = []
        index = 0
        if not forward:
            text = list(reversed(text))
        while index < len(text):
            new_word, new_index = concatenate_words(index, text, ngram_words, forward)
            new_text.append(new_word)
            # Continue after the last word that was merged into new_word.
            index = new_index + 1
        if not forward:
            return list(reversed(new_text))
        return new_text

    def concatenate_words(index, text, ngram_words, forward):
        words = text[index]
        if index == len(text) - 1:
            return words, index
        if words.split(' ')[0] in ngram_words:
            new_word, new_index = concatenate_words(index + 1, text, ngram_words, forward)
            if forward:
                words = words + ' ' + new_word
            else:
                # The text is reversed, so restore the original word order.
                words = new_word + ' ' + words
            index = new_index
        return words, index

Using this simple function to concatenate words into n-grams leads to features which strongly correlate with a specific (Negative/Positive) class, like ‘highly recommend’, ‘best book’ or even ‘couldn’t put it down’.
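To try this out on the example sentences from this post, here is a compact, self-contained sketch. It is an iterative variant written for illustration, not the recursive functions above: the name merge_ngrams and the word sets passed to it are assumptions.

```python
def merge_ngrams(tokens, ngram_words, forward=True):
    """Merge every run of n-gram words with the word that follows it
    (forward=True) or the word that precedes it (forward=False)."""
    if not forward:
        tokens = list(reversed(tokens))
    merged, pending = [], []
    for position, token in enumerate(tokens):
        pending.append(token)
        is_last = position == len(tokens) - 1
        # Keep collecting while the current token is an n-gram word.
        if token in ngram_words and not is_last:
            continue
        if not forward:
            pending.reverse()  # restore the original word order
        merged.append(" ".join(pending))
        pending = []
    return merged if forward else list(reversed(merged))


print(merge_ngrams(["I", "do", "not", "recommend", "this", "book"], {"not", "very"}))
# ['I', 'do', 'not recommend', 'this', 'book']
print(merge_ngrams(["This", "is", "a", "very", "very", "good", "book"], {"not", "very"}))
# ['This', 'is', 'a', 'very very good', 'book']
print(merge_ngrams(["the", "best", "book", "ever"], {"book"}, forward=False))
# ['the', 'best book', 'ever']
```

The loop avoids recursion by buffering words until it reaches one that is not an n-gram word, which keeps the skipping logic in a single place.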

What to expect:

Now that we have a better understanding of Text Classification terms like bag-of-words, features and n-grams, we can start using Classifiers for Sentiment Analysis. Think of Naive Bayes, Maximum Entropy and SVM.